ASP.NET Core 2.1 added some great features that make external API calls easier to manage when the network fails or the service itself is down. Scott Hanselman has a great series of posts on updating to take advantage of these features.

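Using Polly with the new HttpClientFactory, we can set up policies for these failures. A retry policy states the number of retries to attempt and at what interval. A circuit breaker, as in the registration below, stops sending requests for one minute after two handled failures in a row.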

    services.AddHttpClient<MyClient>()
        .AddTransientHttpErrorPolicy(policyBuilder => policyBuilder.CircuitBreakerAsync(
            handledEventsAllowedBeforeBreaking: 2,
            durationOfBreak: TimeSpan.FromMinutes(1)
        ));
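The registration above is a circuit breaker; a retry policy can be attached the same way. Here is a minimal sketch, assuming the `Microsoft.Extensions.Http.Polly` package is referenced. The `MyClient` body, the `AddWorkshopClient` helper name, the retry count of 3, and the exponential delays are illustrative choices, not values from the workshop.

```csharp
using System;
using System.Net.Http;
using Microsoft.Extensions.DependencyInjection;
using Polly;

// Hypothetical typed client; the workshop's real MyClient is not shown in this post.
public class MyClient
{
    public MyClient(HttpClient client) => Client = client;

    public HttpClient Client { get; }
}

public static class WorkshopClientExtensions
{
    // Hypothetical helper, meant to be called from Startup.ConfigureServices.
    public static IServiceCollection AddWorkshopClient(this IServiceCollection services)
    {
        services.AddHttpClient<MyClient>()
            // Retry transient failures (network errors, 5xx, 408) up to 3 times,
            // waiting 2, 4, then 8 seconds between attempts.
            .AddTransientHttpErrorPolicy(policyBuilder => policyBuilder.WaitAndRetryAsync(
                retryCount: 3,
                sleepDurationProvider: attempt => TimeSpan.FromSeconds(Math.Pow(2, attempt))));

        return services;
    }
}
```

Backing off exponentially gives a struggling service room to recover instead of hammering it with immediate retries.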

As I was reading through the docs and making these changes to the ASP.NET Core workshop, it struck me that this work could be pushed to the cloud.

In our workshop app, when a page is requested, the server sends another request to the API to get, for example, the session data. Even though this is an async request, the front end is still essentially waiting for the data before it can render the view.

Other problems we may have to handle:

- Is the API running and available?

- Caching: do we need to fetch this data again, or on every request?

- Latency: is the service or the network slow?

HttpClientFactory and Polly, in combination, account for most or all of these situations (except for caching).

I'd rather hit a reliable endpoint for the data, just as I do for Bootstrap.

Using a CDN

We already trust CDNs (content delivery networks) for many of our web resources. Front-end assets like CSS, JavaScript, fonts and, of course, images are commonly pulled from one. CDNs have servers all over the world, so they can serve a site's static content from a location close to each user. So why not put your data there too, as long as it doesn't change that often?

I created a CDN in Azure in under 5 minutes for storing my images; here is the video of how to get that done.

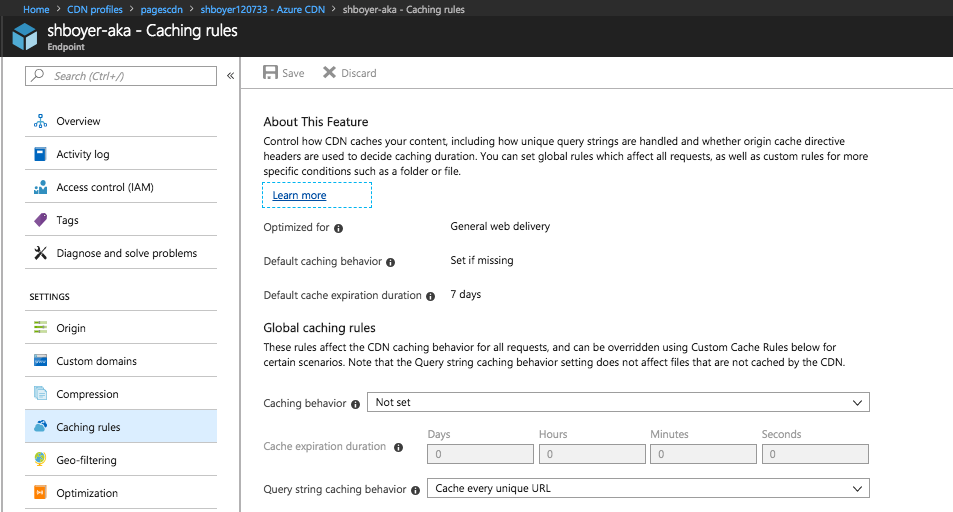

A couple of settings ensure the caching is unique for the data the app is pulling. For my app, I set the CDN to cache every unique URL.

See more about caching and how it works. Another advantage: Azure CDN supports HTTP/2 by default, with nothing to configure, so you get it for free.

Now that our CDN and settings are complete, we need to get the data there.

Using serverless Azure Functions to get and upload the data

I decided to use an Azure Timer Function and set it to run every 2 minutes.

In this example, I am using .NET Core and targeting the Azure Functions V2 Runtime.

- `CloudBlockBlob` - the output stream

- `[Blob("images/sessions.json", FileAccess.Write)]` - binding for our storage account, which is also where the CDN is set to point. `images` is the folder or container.

    using System;
    using System.IO;
    using System.Net.Http;
    using System.Text;
    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.WindowsAzure.Storage.Blob;

    namespace Workshop.Function
    {
        public static class cdn_cache_thing
        {
            // One shared HttpClient is reused across timer runs.
            private static readonly HttpClient client = new HttpClient { BaseAddress = new Uri("https://aspnetcorews-backend.azurewebsites.net") };

            [FunctionName("cdn-cache-thing")]
            public static async Task Run(
                [TimerTrigger("0 */2 * * * *")]TimerInfo myTimer, // fires every 2 minutes
                [Blob("images/sessions.json", FileAccess.Write)] CloudBlockBlob sessionFile,
                TraceWriter log)
            {
                using (var response = await client.GetAsync("/api/Sessions"))
                {
                    // Throw on a non-success status so an error body never overwrites the cached file.
                    response.EnsureSuccessStatusCode();

                    var responseStream = await response.Content.ReadAsStreamAsync();
                    sessionFile.Properties.ContentType = "application/json";
                    await sessionFile.UploadFromStreamAsync(responseStream);
                }
            }
        }
    }
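The function sets the blob's content type, and the same properties object can carry a Cache-Control header, which is one way to keep edge copies from outliving the 2-minute refresh. This is a hedged sketch, not part of the workshop code: `BlobCacheHeaders`, `Apply`, and the 120-second max-age are assumptions, and how a given CDN profile treats origin headers depends on its caching rules.

```csharp
using System;
using Microsoft.WindowsAzure.Storage.Blob;

public static class BlobCacheHeaders
{
    // Hypothetical helper: call it before UploadFromStreamAsync so the
    // properties are sent along with the upload.
    public static void Apply(CloudBlockBlob blob, TimeSpan maxAge)
    {
        blob.Properties.ContentType = "application/json";

        // Assumption: the cache lifetime is chosen to match the timer interval.
        blob.Properties.CacheControl = $"public, max-age={(int)maxAge.TotalSeconds}";
    }
}
```

Inside `Run`, a call such as `BlobCacheHeaders.Apply(sessionFile, TimeSpan.FromMinutes(2));` would take the place of the `ContentType` assignment.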

In a local.settings.json file, a connection string to our storage is set. In the portal, this connection is stored in the Application Settings.

    {
      "IsEncrypted": false,
      "Values": {
        "AzureWebJobsStorage": "DefaultEndpointsProtocol=https;AccountName=shboyer...

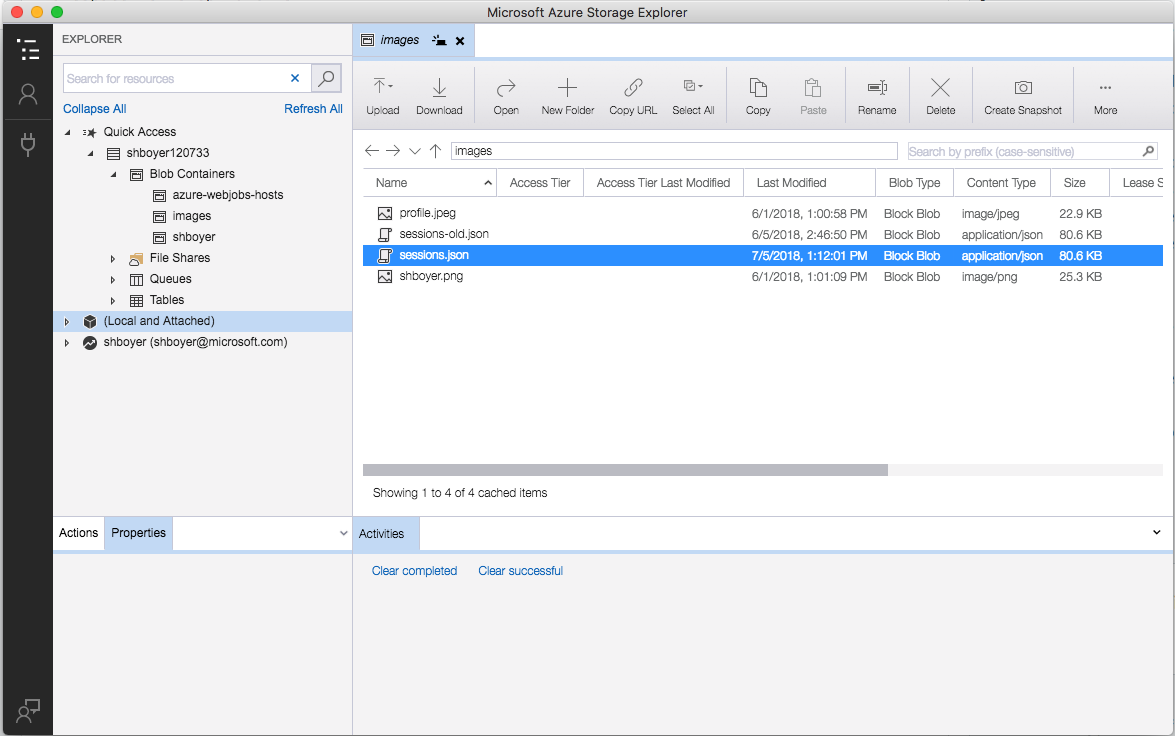

Every 2 minutes the event fires and the file is created in the storage container. Using Azure Storage Explorer, I can see the details of the file.

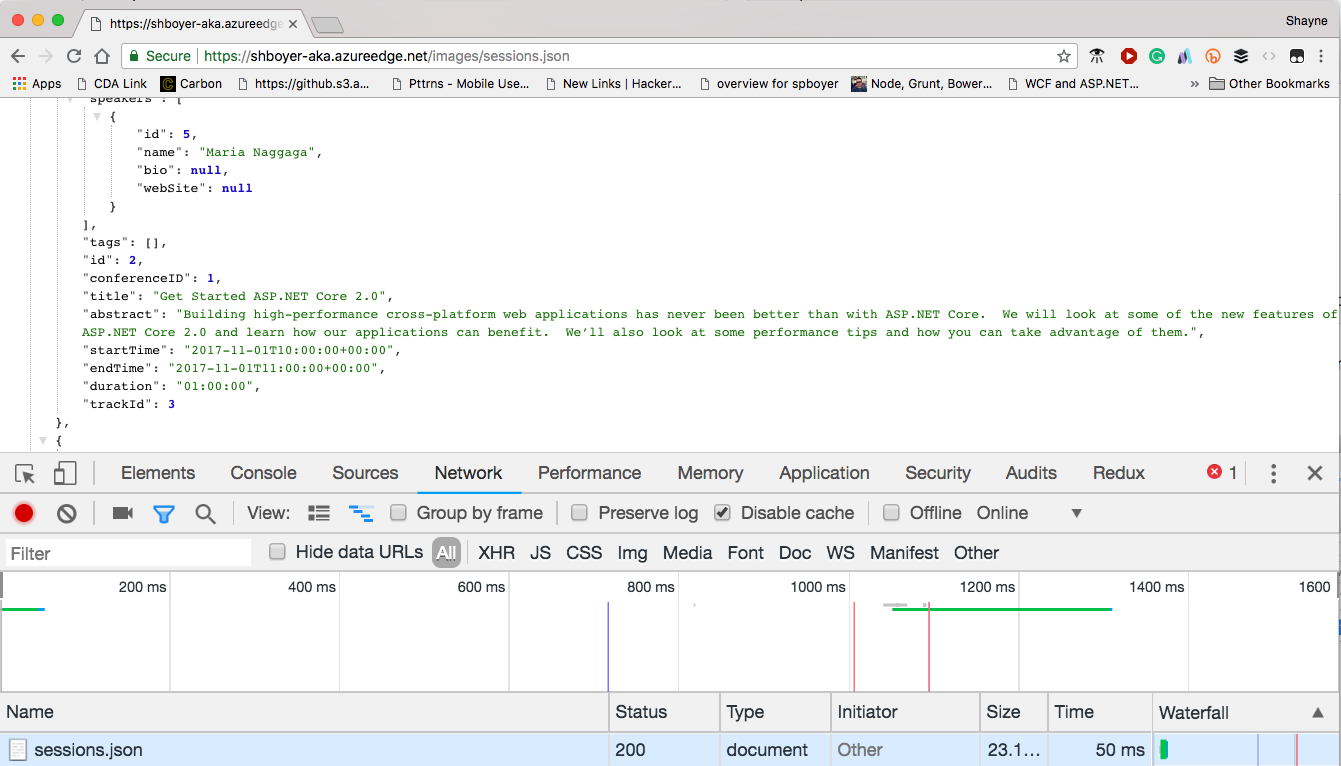

Using the CDN endpoint in the browser to get the data, it's 50ms on the first GET and ~7ms on each additional GET.
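On the app side, the front end can read the same file from the CDN instead of calling the API. Here is a minimal sketch, assuming a hypothetical `SessionsCdnClient` class and a placeholder endpoint such as `https://example.azureedge.net/` (the real endpoint name is not given here). It returns the raw JSON and leaves deserialization to the caller.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

public class SessionsCdnClient
{
    // Matches the container and file name used by the function's Blob binding.
    public const string SessionsPath = "images/sessions.json";

    private readonly HttpClient _client;

    // The HttpClient is expected to have BaseAddress set to the CDN endpoint.
    public SessionsCdnClient(HttpClient client)
    {
        _client = client ?? throw new ArgumentNullException(nameof(client));
    }

    public async Task<string> GetSessionsJsonAsync()
    {
        using (var response = await _client.GetAsync(SessionsPath))
        {
            // Fail loudly rather than hand an error page to the view.
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

Because the class takes an `HttpClient` in its constructor, it can be registered as a typed client with `AddHttpClient`, so the Polly policies shown earlier still apply if the CDN itself has a bad moment.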

- Download Storage Explorer to browse your assets.

Thoughts?

This is a pattern that I've used in the past, with console apps, to push data to the edge and lighten the work on my backend servers.

I can control the cache, handle errors, and deal with changes in any downstream services if the APIs change, all while keeping my front-end apps fast.